Image by Author

# Introduction

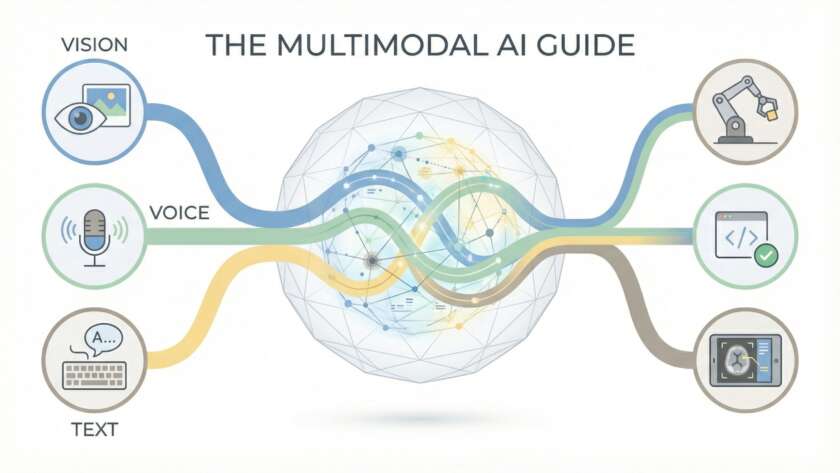

You’ve probably heard people talk about APIs a lot. Put simply, an API (Application Programming Interface) lets one piece of software ask another piece of software for help: it sends a request in an agreed-upon format and gets a response back. For example, when you open a weather app, it might call a real-time API to fetch the latest data from a remote server. This little conversation…